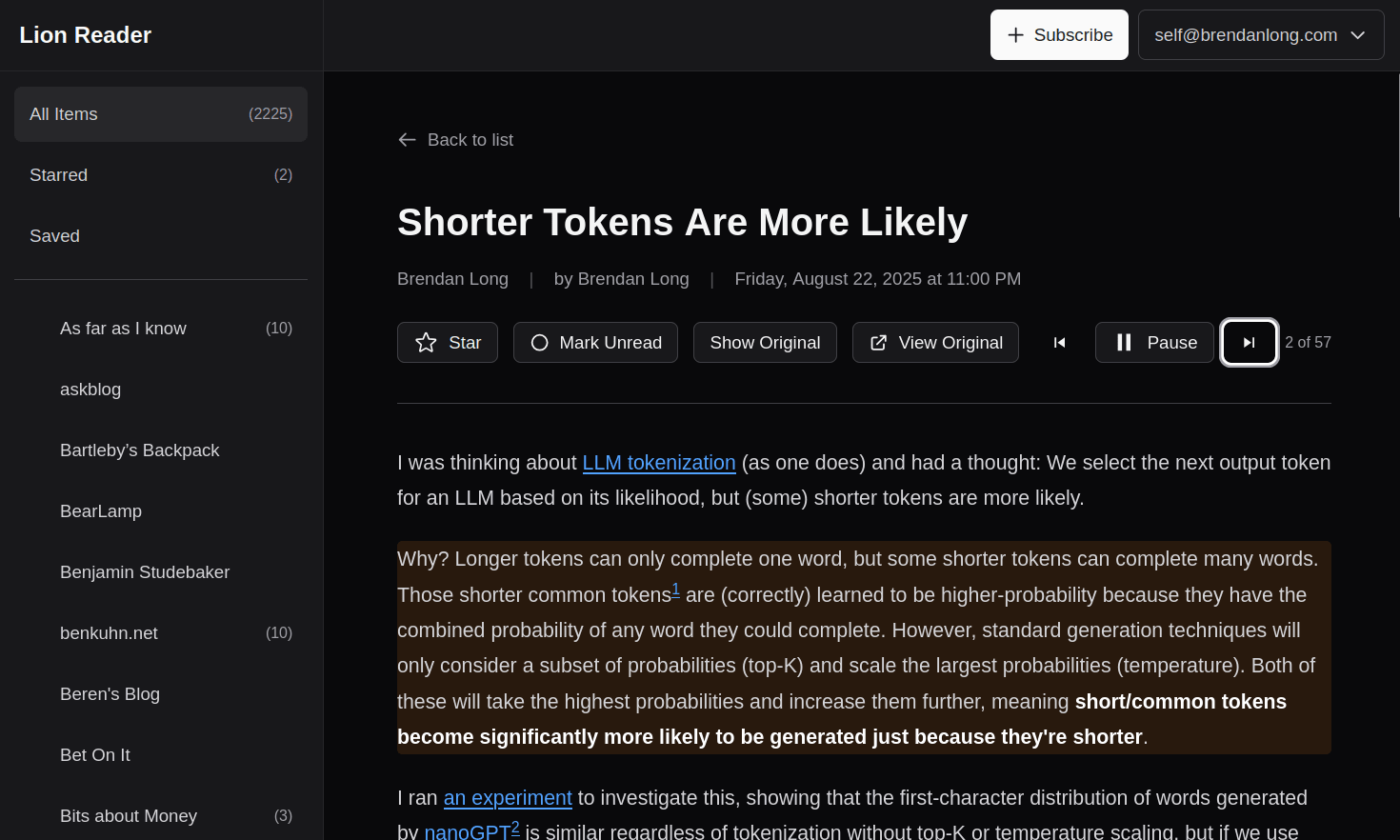

In the last few weeks, I've been playing around with the newest version of Claude Code, which wrote me a read-it-later service including RSS, email newsletters and an Android app[1].

Software engineering experience was useful, since I planned out a lot of the high-level design and data model and sometimes pushed for simpler designs. Overall though, I mostly felt like a product manager trying to specify features as quickly as possible. While software engineering is more than coding, I'm starting to think Claude is already superhuman at the coding part.

This was a major change from earlier this year (coding agents were fun but not very useful) and a few months ago (coding agents were good if you held their hands constantly). Claude Opus 4.5 (and supposedly some of the other new models) generally writes reasonable code by default.

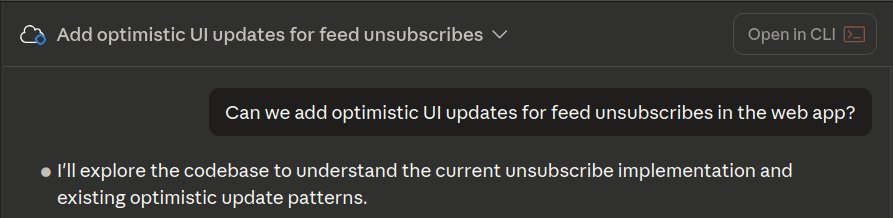

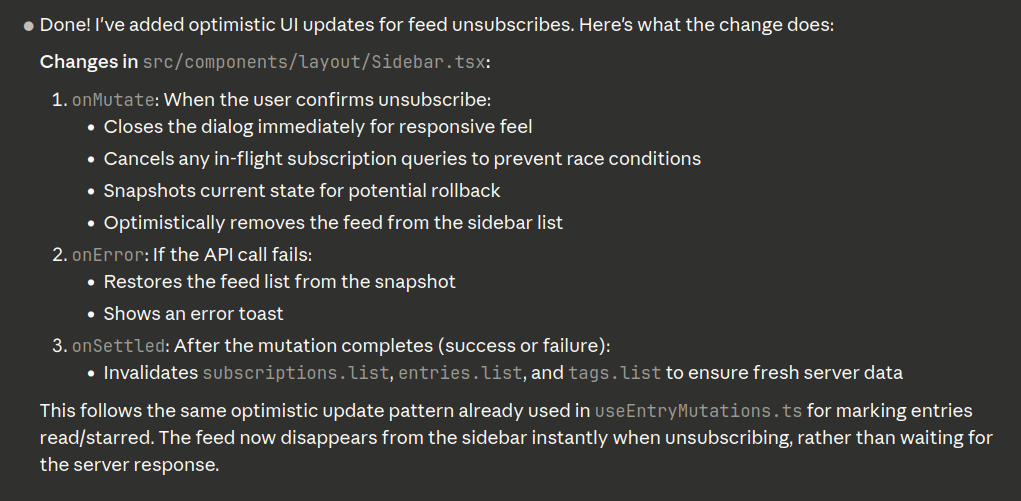

And while some features had pretty detailed designs, some of my prompts were very minimal.

After the first day of this, I mostly just merged PRs without looking at them and assumed they'd work right. I've had to back out or fix a small number since then, but even of those, most were fixed with a bug report prompt.

Selected Features

Android App

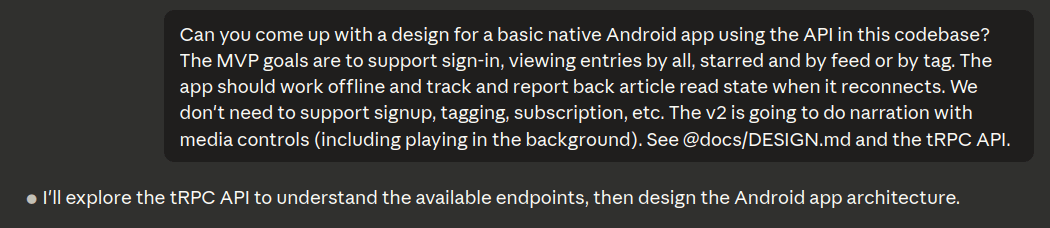

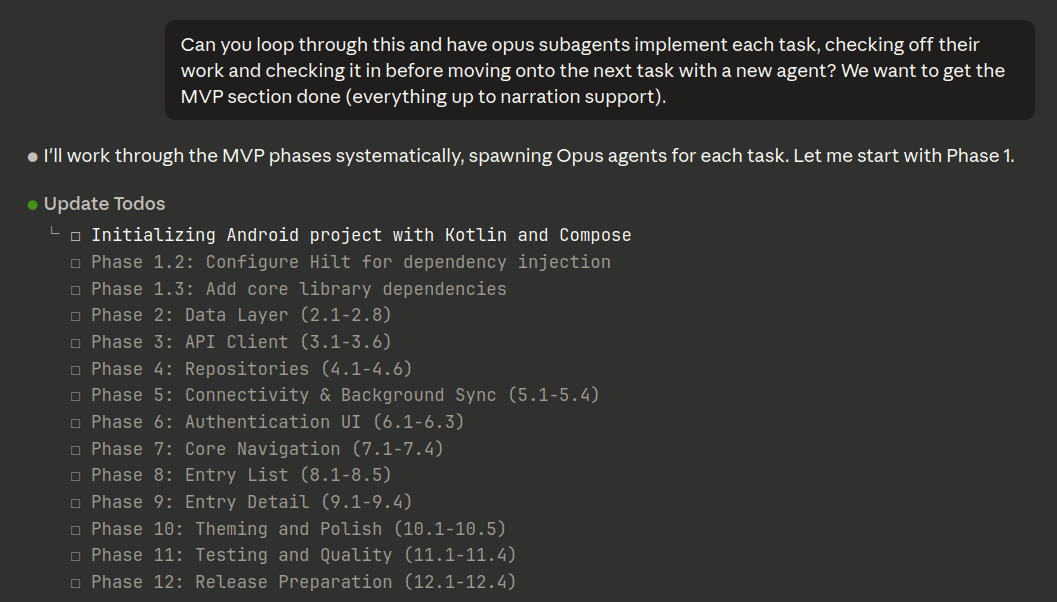

The most impressive thing Claude did was write an entire Android app from this prompt:

After that, almost all features in the Android app were implemented with the prompt, "Can you implement X in the Android app, like how it works in the web app?"

Narration

The most complicated feature I asked it to implement was article narration. The pipeline (optionally) uses Readability.js to clean up RSS feed content, (optionally) runs the result through an LLM to make the text more readable, converts the text to speech using one of two pipelines, and then links the spoken narration back to the correct original paragraph.

This was the buggiest part of the app for a while, but mostly because the high-level design was too fragile. Claude itself suggested a few of the improvements, and narration has worked consistently since we settled on a less fragile design.
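As a rough illustration of the narration pipeline described above, here is a minimal TypeScript sketch of one way to keep each narrated segment tied to its source paragraph. This is not the code from lion-reader. The names `NarrationSegment`, `NarrationOptions`, `buildSegments` and `narrate` are hypothetical, the optional stages are synchronous stand-ins for what would really be Readability.js, an LLM call and a text-to-speech service, and paragraphs are assumed to be separated by blank lines.

```typescript
// Hypothetical sketch: none of these names come from lion-reader.

// Text to be narrated, tagged with the paragraph it came from.
interface NarrationSegment {
  paragraphIndex: number;
  text: string;
}

// Audio for one segment, still tagged with its paragraph.
interface NarratedSegment {
  paragraphIndex: number;
  audio: string;
}

type TextStage = (text: string) => string;

interface NarrationOptions {
  cleanContent?: TextStage; // stand-in for an optional Readability.js pass
  rewriteParagraph?: TextStage; // stand-in for an optional LLM cleanup pass
}

// Assumption: paragraphs are separated by one or more blank lines.
function splitParagraphs(content: string): string[] {
  return content
    .split(/\n\s*\n/)
    .map((paragraph) => paragraph.trim())
    .filter((paragraph) => paragraph.length > 0);
}

// Runs the optional stages and records each paragraph's index *before*
// rewriting, so a rewritten or dropped paragraph never shifts the mapping.
function buildSegments(content: string, options: NarrationOptions = {}): NarrationSegment[] {
  const cleaned = options.cleanContent ? options.cleanContent(content) : content;
  const segments: NarrationSegment[] = [];
  splitParagraphs(cleaned).forEach((paragraph, paragraphIndex) => {
    const text = options.rewriteParagraph ? options.rewriteParagraph(paragraph) : paragraph;
    if (text.trim().length > 0) {
      segments.push({ paragraphIndex, text });
    }
  });
  return segments;
}

// `speak` stands in for whichever text-to-speech pipeline is in use.
function narrate(segments: NarrationSegment[], speak: (text: string) => string): NarratedSegment[] {
  return segments.map((segment) => ({
    paragraphIndex: segment.paragraphIndex,
    audio: speak(segment.text),
  }));
}

// Usage with toy stages in place of the real LLM and TTS calls.
const article = "First paragraph.\n\nSecond paragraph.\n\nThird.";
const narrated = narrate(
  buildSegments(article, { rewriteParagraph: (p) => p.toUpperCase() }),
  (text) => "audio:" + text.length
);
```

Carrying the paragraph index through every stage, rather than re-matching text afterwards, is one way to make this kind of design less fragile: the player only needs `paragraphIndex` to highlight the right paragraph, however much the text was rewritten.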

Selected Problems

Not Invented Here Syndrome

Claude caused a few problems by writing regexes rather than using obvious tools (JSDom, HTML parsers). Having software engineering experience was helpful for noticing these and Claude fixed them easily when asked to.

Bugs in Dependencies

Claude's NIH syndrome was actually partially justified, since the most annoying bugs we ran into were in other people's code. For a bug in database migrations[4], I actually ended up suggesting NIH and had Claude write a basic database migration tool[5].
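To give a sense of how small a basic migration tool can be, here is a minimal TypeScript sketch. It is not the contents of `scripts/migrate.ts`, which I'm not reproducing here. It assumes the goal is to avoid the behavior in the linked issue by giving each migration file its own transaction. The `MigrationDb` interface, `runMigrations`, and the in-memory `FakeDatabase` are all hypothetical, and the interface is synchronous only to keep the example short (a real database driver would be async).

```typescript
// Hypothetical sketch: MigrationDb, runMigrations and FakeDatabase are
// illustrative names, not taken from lion-reader or Drizzle.

interface Migration {
  name: string; // e.g. "0001_create_users"; names sort in apply order
  sql: string;
}

interface MigrationDb {
  begin(): void;
  commit(): void;
  rollback(): void;
  exec(sql: string): void;
  appliedMigrations(): string[];
  recordMigration(name: string): void;
}

// Applies pending migrations in name order, one transaction per migration.
// A failure rolls back only the failing migration and stops the run.
function runMigrations(db: MigrationDb, migrations: Migration[]): string[] {
  const applied = db.appliedMigrations();
  const pending = migrations
    .filter((m) => applied.indexOf(m.name) === -1)
    .sort((a, b) => (a.name < b.name ? -1 : a.name > b.name ? 1 : 0));
  const ran: string[] = [];
  for (const migration of pending) {
    db.begin();
    try {
      db.exec(migration.sql);
      db.recordMigration(migration.name); // recorded in the same transaction
      db.commit();
    } catch (err) {
      db.rollback();
      throw err;
    }
    ran.push(migration.name);
  }
  return ran;
}

// In-memory stand-in for a database, used only to exercise the runner.
class FakeDatabase implements MigrationDb {
  log: string[] = [];
  private applied: string[] = [];
  private staged: string[] = [];

  begin(): void {
    this.staged = [];
    this.log.push("BEGIN");
  }
  exec(sql: string): void {
    if (sql.indexOf("FAIL") !== -1) throw new Error("simulated SQL error");
    this.log.push(sql);
  }
  recordMigration(name: string): void {
    this.staged.push(name);
  }
  commit(): void {
    this.applied = this.applied.concat(this.staged);
    this.staged = [];
    this.log.push("COMMIT");
  }
  rollback(): void {
    this.staged = [];
    this.log.push("ROLLBACK");
  }
  appliedMigrations(): string[] {
    return this.applied.slice();
  }
}

// Usage: migrations are applied in name order regardless of input order.
const db = new FakeDatabase();
const ran = runMigrations(db, [
  { name: "0002_add_index", sql: "CREATE INDEX ..." },
  { name: "0001_create_table", sql: "CREATE TABLE ..." },
]);
```

Recording the migration name inside the same transaction as its SQL means a migration is either fully applied and recorded, or neither, so a rerun after a failure picks up exactly where the last one stopped.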

The other major (still unsolved) problem we're having is that the Android emulator doesn't shut down properly in CI[6]. Sadly I think this may be too much for Claude to just replace, but it's also not a critical part of the pipeline like migrations were.

Other Observations

- Claude likes to maintain backwards compatibility, and needs to be reminded not to bother if you don't need it.

- Claude did hallucinate API docs, which has led to me including the fly.io docs in the repo for reference[7]. I imagine this would be a lot more annoying if you were using a lot of uncommon APIs, but Claude knows React very well.

- Sometimes it would come up with overly complicated designs, and then fix them when I asked it to check if anything was overly complicated.

- It actually did pretty well at coming up with high-level system designs, although it had a tendency to come up with designs that were more fragile than necessary (race conditions, situations where the state needed to be maintained very carefully).

Remaining Issues

The problems Claude still hasn't solved with minimal prompts are:

- The Android emulator issue in CI (upstream problem).

- The email newsletter setup requires a bunch of manual steps in the Cloudflare Dashboard so I haven't really tried it.

- Narration generation seems to happen on the main thread, making the UI laggy. Claude's first attempt at a web worker implementation didn't work and I haven't got around to trying to figure out what's wrong.

But that's it, and the biggest problem here is that I'm putting in basically no effort. I expect each of these would be solvable if I actually spent an hour on it.

This was an eye-opening experience for me, since AI coding agents went from kind-of-helpful to wildly-productive in just the last month. If you haven't tried them recently, you really should. And keep in mind that this is the worst they will ever be.

[1] https://github.com/brendanlong/lion-reader - "brendanlong/lion-reader"

[2] https://github.com/brendanlong/lion-reader/blob/master/docs/features/android-app-design.md - "lion-reader/docs/features/android-app-design.md"

[3] https://github.com/brendanlong/lion-reader/pull/13 - "Implement the Android app (Pull Request #13)"

[4] https://github.com/drizzle-team/drizzle-orm/issues/3249 - "[BUG]: Drizzle kit applies multiple migration files in the same transaction (Issue #3249)"

[5] https://github.com/brendanlong/lion-reader/blob/master/scripts/migrate.ts - "lion-reader/scripts/migrate.ts"

[6] https://github.com/ReactiveCircus/android-emulator-runner/issues/385 - "Runner action hangs after killing emulator with stop: not implemented (Issue #385)"

[7] https://github.com/brendanlong/lion-reader/tree/master/docs/references - "lion-reader/docs/references"