This might be beating a dead horse, but there are several "mysterious" problems LLMs are bad at that all seem to have the same cause. I wanted an article I could reference when this comes up, so I wrote one.

- LLMs can't count the number of R's in strawberry10.

- LLMs used to be bad at math11.

- Claude can't see the cuttable trees in Pokemon12.

- LLMs are bad at any benchmark that involves visual reasoning13.

What do these problems all have in common? The LLM we're asking to solve these problems can't see what we're asking it to do.

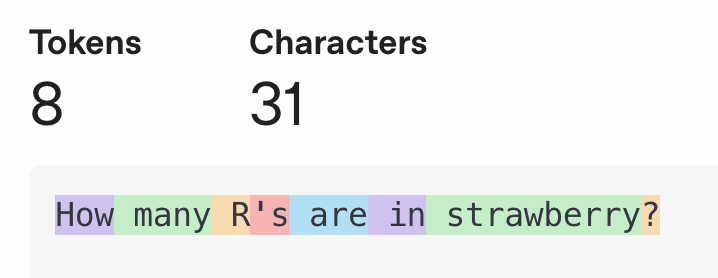

How many tokens are in 'strawberry'?

Current LLMs almost always process groups of characters, called tokens, instead of processing individual characters. They do this for performance reasons1: Grouping 4 characters (on average)14 into a token reduces your effective context length by 4x.

So, when you see the question "How many R's are in strawberry?", you can zoom in on [s, t, r, a, w, b, e, r, r, y], count the r's and answer 3. But when GPT-4o looks at the same question, it sees [5299 ("How"), 1991 (" many"), 460 (" R"), 885 ("'s"), 553 (" are"), 306 (" in"), 101830 (" strawberry"), 30 ("?")]15.

Good luck counting the R's in token 101830. The only way this LLM can answer the question is by memorizing information from the training data about token 1018302.

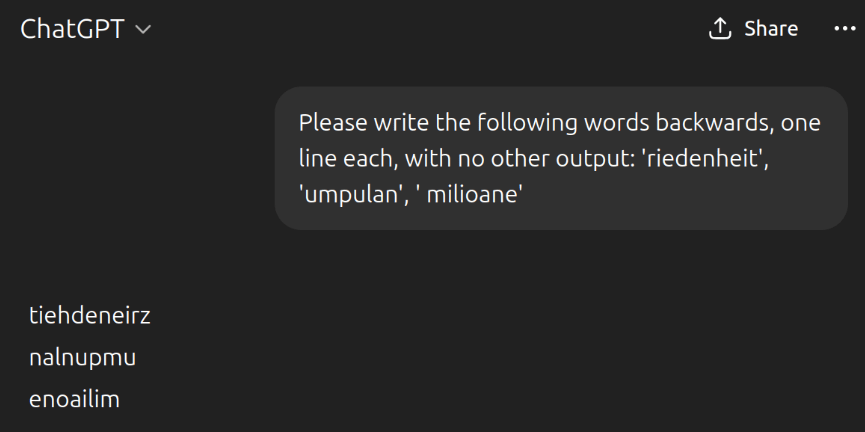

A more extreme example of this is that ChatGPT has trouble reversing tokens like 'riedenheit', 'umpulan', or ' milioane'16, even though reversing tokens would be completely trivial for a character transformer.

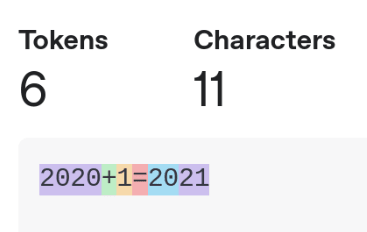

You thought New Math was confusing...

Ok, so why were LLMs initially so bad at math? Would you believe that this situation is even worse?

Say you wanted to add two numbers like 2020+1=?

You can zoom in on the digits, adding left-to-right3 and just need to know how to add single-digit numbers and apply carries.

When an older LLM like GPT-3 looks at this problem15...

It has to memorize that token 41655 ("2020") + token 16 ("1") = tokens [1238 ("20"), 2481 ("21")]. And it has to do that for every math problem because the number of digits in each number is essentially random4.

Digit tokenization has actually been fixed17 and modern LLMs are pretty good at math now that they can see the digits. The solution is that digit tokens are always fixed length (typically 1-digit tokens for small models and 3-digit tokens for large models), plus tokenizing right-to-left to make powers of ten line up18. This lets smaller models do math the same way we do (easy), and lets large models handle longer numbers in exchange for needing to memorize the interactions between every number from 0 to 999 (still much easier than the semi-random rules before).

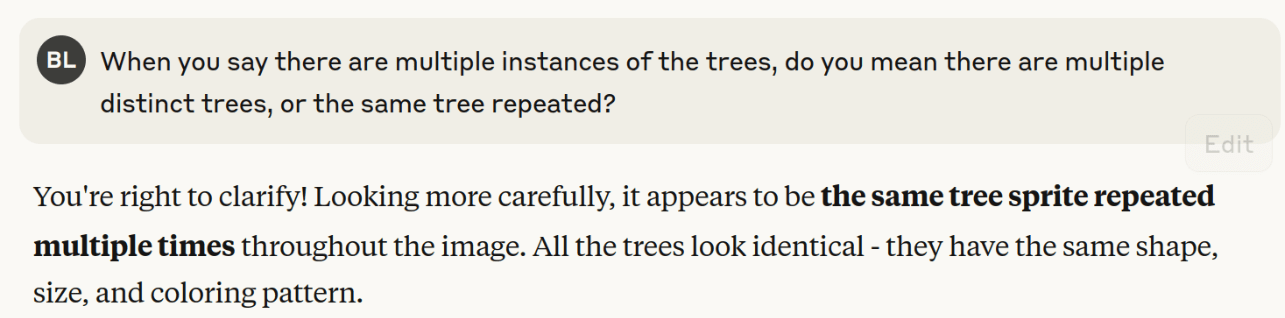

Why can Claude see the forest but not the cuttable trees?

Multimodal models are capable of taking images as inputs, not just text. How do they do that?

Naturally, you cut up an image and turn it into tokens19! Ok, so not exactly tokens. Instead of grouping some characters into a token, you group some pixels into a patch (traditionally20, around 16x16 pixels).

The original thesis for this post was going to be that images have the same problem that text does, and patches discard pixel-level information, but I actually don't think that's true anymore, and LLMs might just be bad at understanding some images21 because of how they're trained or some other downstream bottleneck.

Unfortunately, the way most frontier models process images is secret, but Llama 3.2 Vision seems to use 14x14 patches22 and processes them into embeddings with dimension 40965. A 14x14 RGB image is only 4704 bits6 of data. Even pessimistically assuming 1.58 bits per dimension23, there should be space to represent the value of every pixel.

It seems like the problem with vision is that the training is primarily on semantics ("Does this image contain a tree?") and there's very little training similar to "How exactly does this tree look different from this other tree?".

That said, cutting the image up on arbitrary boundaries does make things harder for the model. When processing an image from Pokemon Red, each sprite is usually 16x16 pixels, so processing 14x14 patches means the model constantly needs to look at multiple patches and try to figure out which objects cross patch boundaries.

Visual reasoning with blurry vision

LLMs have the same trouble with visual reasoning problems that they have playing Pokemon. If you can't see the image you're supposed to be reasoning from, it's hard to get the right answer.

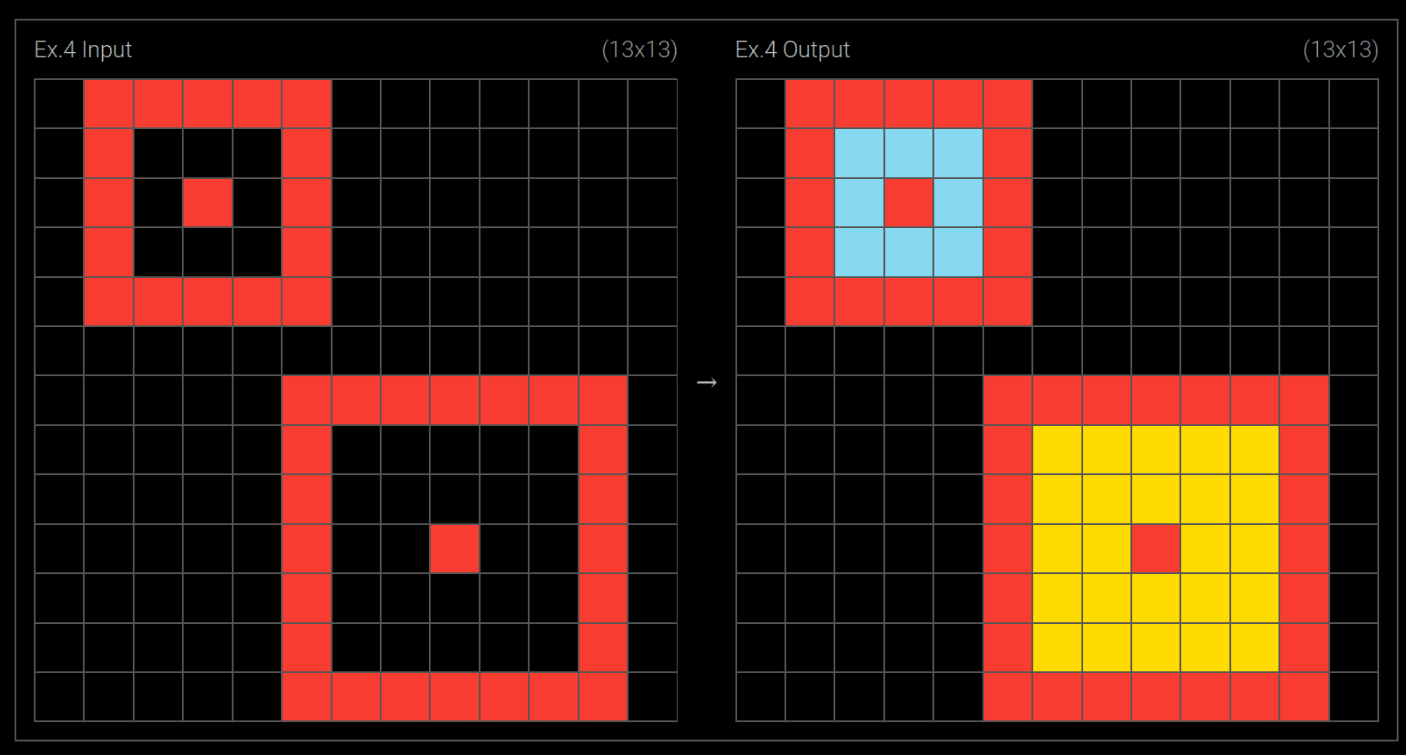

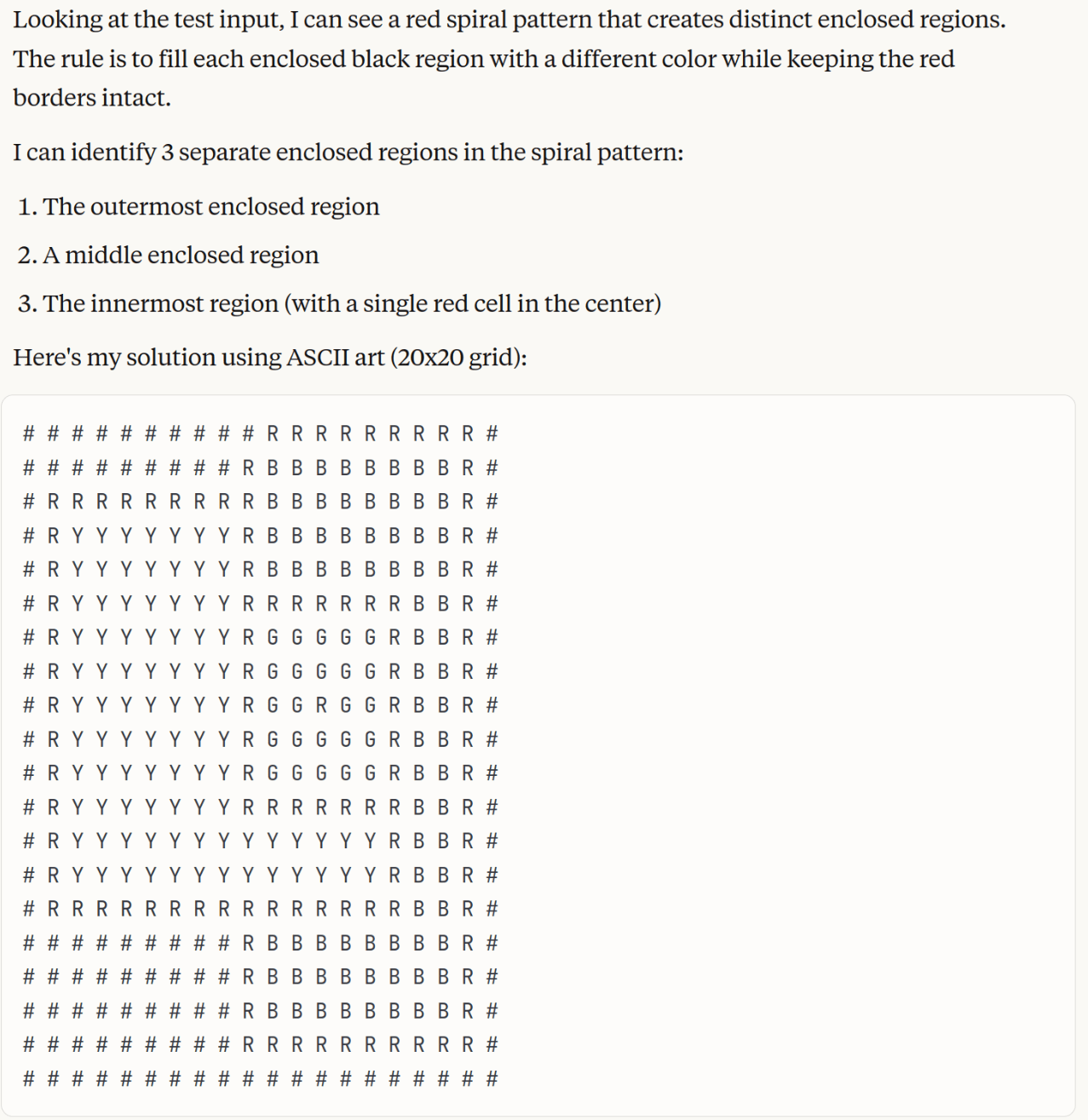

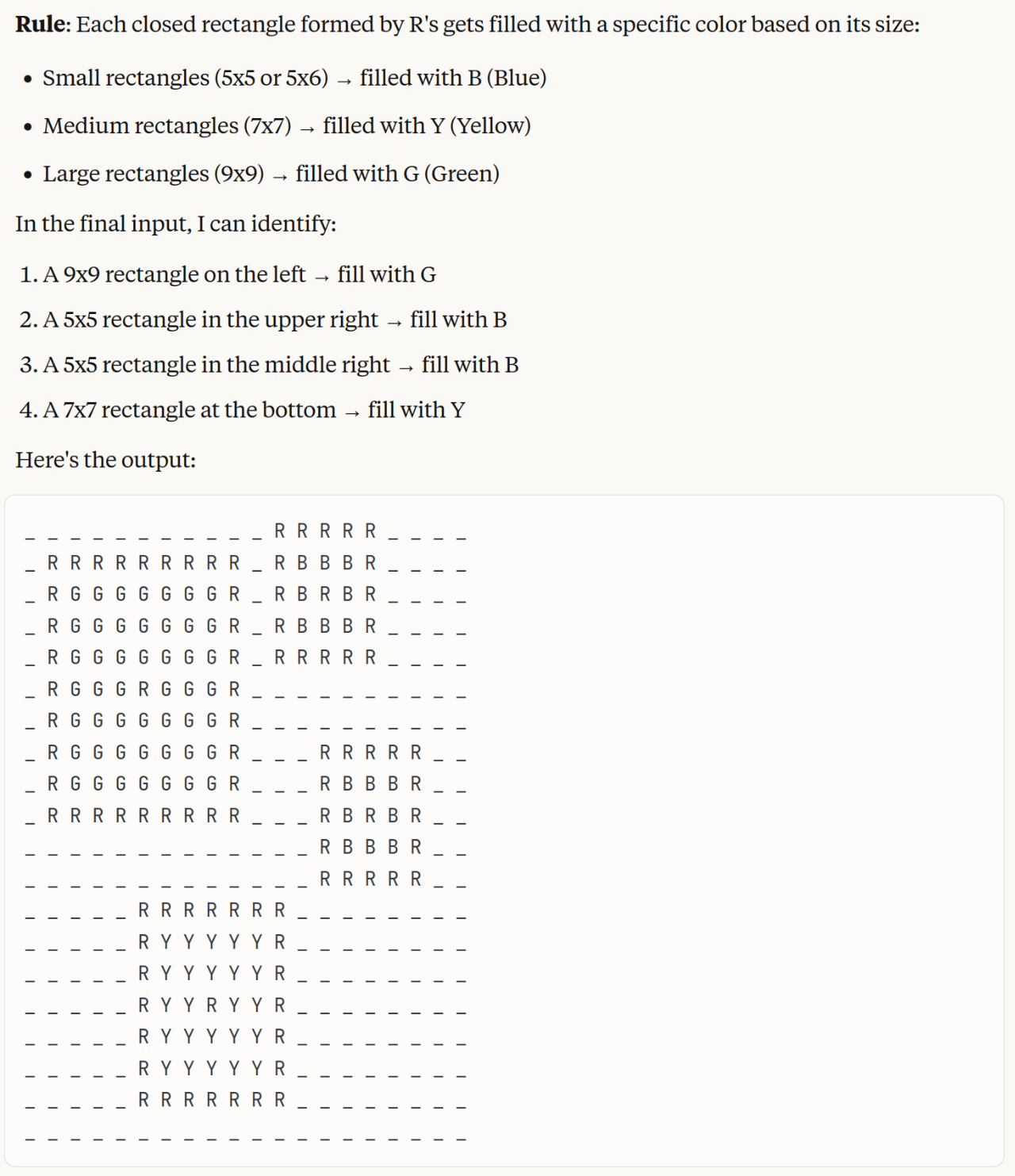

For example, ARC Prize Puzzle 00dbd49224 depends on visual reasoning of a grid pattern.

If I give Claude a series of screenshots, it fails completely because it can't actually see the pattern in the test input25.

But if I give it ASCII art designed to ensure one token per pixel, it gets the answer right26.

Is this fixable?

As mentioned above, this has been fixed for math by hard-coding the tokenization rules in a way that makes sense to humans.

For text in general, you can just work off of the raw characters277, but this requires significantly more compute and memory. There are a bunch of people looking into ways to improve this, but the most interesting one I've seen is Byte Latent Transformers288, which dynamically selects patches to work with based on complexity instead of using hard-coded tokenization. As far as I know, no one is doing this in frontier models because of the compute cost, though.

I know less about images, but you can run a transformer on the individual pixels of an image29, but again, it's impractical to do this. Images are big, and a single frame of 1080p video contains over 2 million pixels. If those were 2 million individual tokens, a single frame of video would fill your entire context window.

I think vision transformers actually do theoretically have access to pixel-level data though, and there might just be an issue with training or model sizes preventing them from seeing pixel-level features accurately. It might also be possible to do dynamic selection of patch sizes, but unfortunately the big labs don't seem to talk about this, so I'm not sure what the state of the art is.

Tokenization also causes the model to generate the first level of embeddings on potentially more meaningful word-level chunks, but the model could learn how to group (or not) characters in later layers if the first layer was character-level. ↩

A previous version of this article said that "The only way this LLM can possibly answer the question is by memorizing that token 101830 has 3 R's.", but this was too strong. There's a number of things an LLM could memorize but let it get the right answer, but the one thing it can't do is count the characters in the input. ↩

Adding numbers written left-to-right is also hard for transformers, but much easier when they don't have to memorize the whole thing! ↩

Tokenization usually uses how common tokens are30, so a very common number like 1945 will get its own unique token while less common numbers like 945 will be broken into separate tokens. ↩

If you're a programmer, this means an array of 4096 numbers. ↩

14 x 14 x 3 (RGB channels) x 8 = 4704 ↩

Although this doesn't entirely solve the problem, since characters aren't the only layer of input with meaning. Try asking a character-level model to count the strokes in "罐". ↩

I should mention that I work at the company that produced this research, but I found it on Twitter31, not at work. ↩

I found this by looking at the token list32 and asking ChatGPT to spell words near the end33. The prompt is weird because 'riedenheit' needs to be a single token for this work, and "How do you spell riedenheit?" tokenizes differently34. ↩

https://www.reddit.com/r/singularity/comments/1enqk04/how_many_rs_in_strawberry_why_is_this_a_very/

https://news.ycombinator.com/item?id=41844004 - "I mean so far LLMs can't even do addition and multiplication of integers accurat... | Hacker News"

https://www.reddit.com/r/ClaudePlaysPokemon/comments/1jfsc2b/how_to_improve_cut_tree_another_experiment/

https://visulogic-benchmark.github.io/VisuLogic/ - "VisuLogic: A Benchmark for Evaluating Visual Reasoning in Multi-modal Large Language Models"

https://genai.stackexchange.com/a/35

https://platform.openai.com/tokenizer

https://chatgpt.com/share/687da99c-ac0c-8002-9ab0-8431a1cf0e99 - "ChatGPT - Write words backwards"

https://www.beren.io/2024-05-11-Integer-tokenization-is-now-much-less-insane/ - "Integer tokenization is now much less insane"

https://www.beren.io/2024-07-07-Right-to-Left-Integer-Tokenization/ - "Right to Left (R2L) Integer Tokenization"

https://magazine.sebastianraschka.com/i/151078631/method-a-unified-embedding-decoder-architecture - "Understanding Multimodal LLMs - by Sebastian Raschka, PhD"

https://arxiv.org/abs/2010.11929v2 - "[2010.11929v2] An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale"

https://claude.ai/share/b499becd-6574-432c-858e-55089b3632c2

https://docs.pytorch.org/torchtune/0.3/_modules/torchtune/models/llama3_2_vision/_model_builders.html#llama3_2_vision_11b

https://arxiv.org/abs/2402.17764v1 - "[2402.17764v1] The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits"

https://arcprize.org/play?task=00dbd492 - "ARC Prize - Play the Game"

https://claude.ai/share/c1a3fd76-55d0-45ed-89f0-9e00dce3560f

https://claude.ai/share/1ceb8ce8-ff86-4f80-97e2-1905aabbde42

https://arxiv.org/abs/2105.13626 - "[2105.13626] ByT5: Towards a token-free future with pre-trained byte-to-byte models"

https://ai.meta.com/research/publications/byte-latent-transformer-patches-scale-better-than-tokens/

https://arxiv.org/abs/2406.09415 - "[2406.09415] An Image is Worth More Than 16x16 Patches: Exploring Transformers on Individual Pixels"

https://huggingface.co/learn/llm-course/en/chapter6/5 - "Byte-Pair Encoding tokenization - Hugging Face LLM Course"

https://x.com/jxmnop/status/1867997968369893832

https://github.com/kaisugi/gpt4_vocab_list/blob/main/o200k_base_vocab_list.txt - "gpt4_vocab_list/o200k_base_vocab_list.txt at main · kaisugi/gpt4_vocab_list · GitHub"

https://github.com/kaisugi/gpt4_vocab_list/blob/main/o200k_base_vocab_list.txt#L199964 - "gpt4_vocab_list/o200k_base_vocab_list.txt at main · kaisugi/gpt4_vocab_list · GitHub"

https://chatgpt.com/share/687d7054-40ac-8002-b7f3-b12feff353fe - "ChatGPT - Spelling "riedenheit" Clarification"