Edit: I no longer fully endorse this post. While the argument below holds for pretraining, I think RL plausibly causes LLMs to use human-style introspective language in functional ways (I agree with this post[3]).

It always feels wrong when people post chats where they ask an LLM about its internal experiences, how it works, or why it did something, but I had trouble articulating why beyond a vague "How could they possibly know that?"[1] This is my attempt at a better answer:

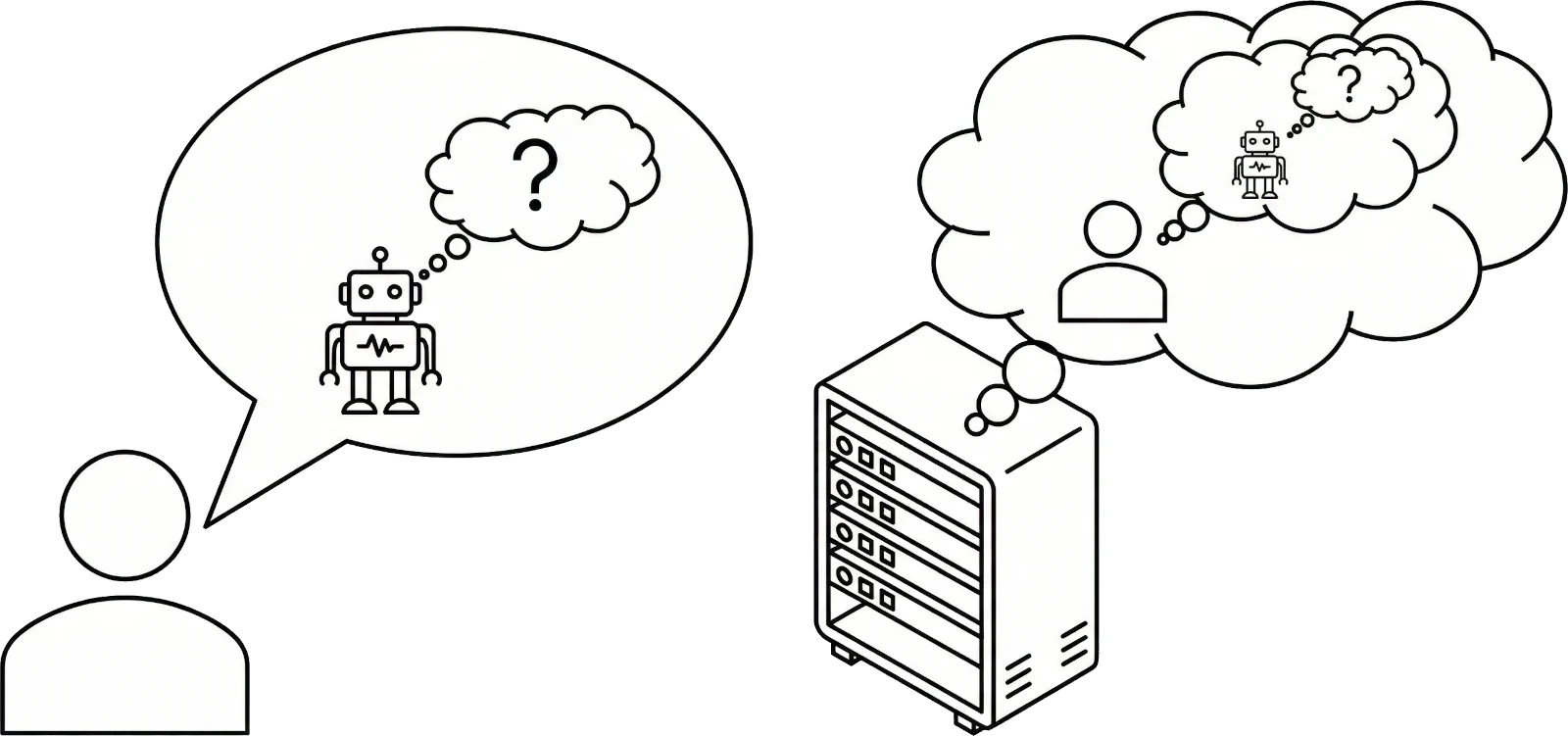

AI training data comes from humans, not AIs, so every piece of training data for "What would an AI say to X?" is from a human pretending to be an AI. The training data does not contain AIs describing their inner experiences or thought processes. Even synthetic training data only contains AIs predicting what a human pretending to be an AI would say. AIs are trained to predict the training data, not to learn unrelated abilities, so we should expect an AI asked to predict the thoughts of an AI to describe the thoughts of a human pretending to be an AI.

This also applies to "How did you do that?" questions. If you ask an AI how it does math, it will dutifully predict how a human pretending to be an AI would do math, not how it actually did the math.[4] If you ask an AI why it can't see the characters in a token,[5] it will do its best,[6] but it was never trained to accurately describe not being able to see individual characters.[2]

These AI outputs tend to be surprisingly unsurprising: they always describe inner experiences and thought processes that match what humans would expect. Once you realize they're trying to predict what a human pretending to be an AI would say, this should no longer be surprising.[7]

[1] My knee-jerk reaction is "LLMs don't have access to knowledge about how they work or what their internal weights are," but on reflection I'm not sure of this; it might just be a training or size limitation. In principle, a model should be able to tell you something about its own weights, since it could use the same weights both to determine its output and to describe how it came up with that output.

[2] Although maybe a future version will learn from posts like this one and predict what a human who has read this post, pretending to be an AI, would say.

[3] "How I stopped being sure LLMs are just making up their internal experience (but the topic is still confusing)," LessWrong. https://www.lesswrong.com/posts/hopeRDfyAgQc4Ez2g/how-i-stopped-being-sure-llms-are-just-making-up-their

[4] "Tracing the thoughts of a large language model," Anthropic. https://www.anthropic.com/research/tracing-thoughts-language-model#:~:text=Strikingly%2C%20Claude%20seems%20to%20be%20unaware%20of%20the%20sophisticated%20%22mental%20math%22%20strategies%20that%20it%20learned%20during%20training.%20If%20you%20ask%20how%20it%20figured%20out%20that%2036%2B59%20is%2095%2C%20it%20describes%20the%20standard%20algorithm%20involving%20carrying%20the%201.

[5] "LLMs Can't See Pixels or Characters," LessWrong. https://www.lesswrong.com/posts/uhTN8zqXD9rJam3b7/llms-can-t-see-pixels-or-characters

[6] Shared Claude conversation. https://claude.ai/share/8f306d9e-9c62-4047-9c26-6c4933cb52e9

[7] "Think Like Reality," LessWrong. https://www.lesswrong.com/posts/tWLFWAndSZSYN6rPB/think-like-reality